ThreadScope Tour/SparkOverview

Latest revision as of 17:22, 7 December 2011

ThreadScope and sparks

ThreadScope 0.2.1 and higher come with spark event visualisations that help you understand not just what behaviour your parallel program is exhibiting (e.g. not using all cores) but also why.
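To have something to look at in the spark views, you need a program that creates sparks and an eventlog recorded while it runs. The following is a minimal sketch, not taken from the tour itself: `fib` and `parPair` are hypothetical names, and `par`/`pseq` are imported from `GHC.Conc` in base only to keep the example dependency-free (the `parallel` package exports the same combinators from `Control.Parallel`). The build and run flags in the comments are standard GHC options; recent GHC versions include eventlog support by default, so `-eventlog` may be unnecessary there.

```haskell
-- Build with the threaded runtime and eventlog support:
--   ghc -O2 -threaded -eventlog -rtsopts Sparks.hs
-- Run on two cores and write Sparks.eventlog for ThreadScope:
--   ./Sparks +RTS -N2 -l -RTS

import GHC.Conc (par, pseq)

-- Deliberately naive Fibonacci, used only as a CPU-bound workload.
fib :: Int -> Integer
fib n
  | n < 2     = toInteger n
  | otherwise = fib (n - 1) + fib (n - 2)

-- Create one spark for 'a', evaluate 'b' on the current thread,
-- then combine the two results.
parPair :: Int -> Int -> Integer
parPair m n = a `par` (b `pseq` (a + b))
  where
    a = fib m
    b = fib n

main :: IO ()
main = print (parPair 27 28)
```

A program this small creates a single spark, so the spark views will be nearly empty; it is only meant to confirm that the build and logging setup works before moving on to a real workload.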

It helps to know a bit about sparks:

Spark viewer features

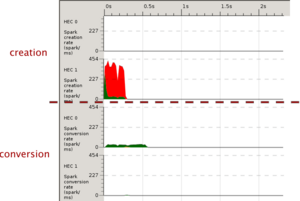

Review spark creation and creation rates:

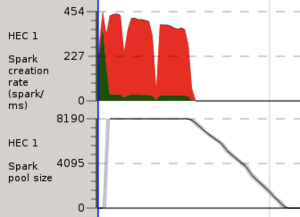

Track the size of the spark pool:

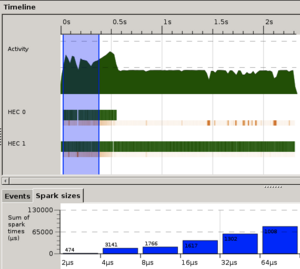

See the distribution of sparks grouped by their sizes:

Things to look for

The combination of features can be used to look for some common problems, such as:

- Too few sparks (not enough parallelism)
  - spark pool hits empty
  - low spark creation rate

- Too many sparks
  - overflow (red) is wasted work
  - can cause catastrophic loss of parallelism

- Too many duds or fizzled sparks (grey)

- Too many sparks get GC'd (orange)

- Sparks too small (overheads too high)

- Sparks too big (load balancing problems, e.g. sudoku2)
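The granularity items in this list can be illustrated with a small sketch. It is an assumption-laden illustration rather than code from the tour: `chunksOf`, `sumFine`, `sumChunked` and `work` are hypothetical names, and `par`/`pseq` again come from `GHC.Conc` in base. `sumFine` creates one spark per list element, which tends towards sparks that are too small and too numerous; `sumChunked` creates one spark per chunk, so the chunk size becomes the knob that trades overhead against load balancing.

```haskell
import Data.List (foldl')
import GHC.Conc (par, pseq)

-- Split a list into chunks of at most n elements (n must be positive).
chunksOf :: Int -> [a] -> [[a]]
chunksOf _ [] = []
chunksOf n xs = let (h, t) = splitAt n xs in h : chunksOf n t

-- One spark per element: many tiny sparks, high per-spark overhead.
sumFine :: (a -> Int) -> [a] -> Int
sumFine f = go
  where
    go []       = 0
    go (x : xs) =
      let y    = f x
          rest = go xs
      in y `par` (rest `pseq` (y + rest))

-- One spark per chunk of n elements: fewer, larger sparks.
sumChunked :: Int -> (a -> Int) -> [a] -> Int
sumChunked n f xs = go (chunksOf n xs)
  where
    go []       = 0
    go (c : cs) =
      let s    = foldl' (\acc x -> acc + f x) 0 c
          rest = go cs
      in s `par` (rest `pseq` (s + rest))

-- Hypothetical workload: number of decimal digits of x factorial.
work :: Int -> Int
work x = length (show (product [1 .. toInteger x]))

main :: IO ()
main = do
  print (sumFine work [1 .. 2000])
  print (sumChunked 100 work [1 .. 2000])
```

Both functions compute the same sum; only the number and size of the sparks differ. Making the chunks very large swings to the opposite problem, a few big sparks that are hard to balance across cores, so comparing the spark views for several chunk sizes is one way to explore the trade-off.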

In the following sections we will walk through some examples of attempts to diagnose these problems.